Hanna Moosa's XAI Techniques Win RIPC 2022 Gold Award

Explainable Artificial Intelligence (XAI) techniques provide the transparency and explainability required of complex AI models used to identify fraud in the healthcare sector, in line with the European Union (EU) General Data Protection Regulation (GDPR) and ISO 26262 safety standards.

Some of the most commonly reported health care frauds are billing for services, procedures, and/or supplies that were never rendered, charging for more expensive services than those actually provided, performing unnecessary services for financial gain, misrepresenting non-covered treatments as a medical necessity, and falsifying a patient's diagnosis to justify tests, surgeries, or other procedures.

As Artificial Intelligence (AI) advances, it may be able to help analyse the massive number of fraud cases in the healthcare system and support compliance with improved International Organization for Standardization (ISO) guidelines and safety requirements.

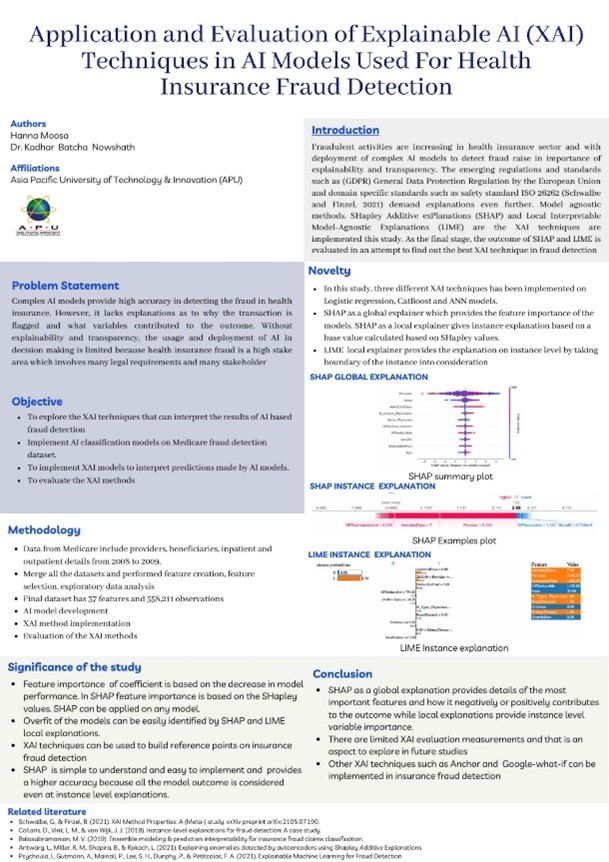

Hanna Moosa, a Master's student in Data Science and Business Analytics at Asia Pacific University of Technology & Innovation (APU), recently received a Gold Award for her final year project titled “Application and Evaluation of eXplainable AI (XAI) Techniques in AI Models Used for Health Insurance Fraud Detection” in the Research & Innovation Poster Competition (RIPC), Series 1/2022, under the Master Science, Technology, Engineering, and Mathematics category.

RIPC, organised by MNNF Network, Malaysia, is one of the platforms for highlighting, recognising, and sharing students' final year projects, theses, or dissertations from various Malaysian universities, and for encouraging a culture of research and innovation among students.

Hanna stated that she chose this study due to an increase in fraudulent activities and the deployment of complex AI models to detect fraud in the healthcare domain, as well as regulations such as the European Union's (EU) General Data Protection Regulation (GDPR) and domain-specific standards such as ISO 26262 Safety Standard.

She used complex AI models, such as boosting models and deep learning models, to detect fraud, and applied model-agnostic XAI techniques on top of these models to provide the necessary explanations and increase the models' transparency.

Apart from the global model explanation provided by SHapley Additive exPlanations (SHAP), her study also provides local, instance-level explanations using SHAP as well as Local Interpretable Model-Agnostic Explanations (LIME). Finally, the outcomes of SHAP and LIME are evaluated to find the best XAI technique for fraud detection.
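To give a rough sense of what local and global Shapley-based explanations look like, here is a minimal sketch in plain Python. It is not Hanna's implementation and does not use the SHAP library: the scoring function `fraud_score`, the feature names, the sample claims, and the baseline are all hypothetical, and the Shapley values are computed exactly by enumerating feature coalitions, which is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial

# Hypothetical feature names and toy scoring model (assumptions, not from the study).
FEATURES = ["claim_amount", "num_procedures", "provider_flagged"]

def fraud_score(x):
    """Toy fraud score: linear terms plus one interaction term."""
    amount, procedures, flagged = x
    return 0.002 * amount + 0.05 * procedures + 0.3 * flagged + 0.001 * amount * flagged

def shapley_values(model, x, baseline):
    """Exact Shapley values for one instance (a local explanation).

    Features outside a coalition are replaced by their baseline value.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

def global_importance(model, instances, baseline):
    """Mean absolute Shapley value per feature (a simple global summary)."""
    per_instance = [shapley_values(model, x, baseline) for x in instances]
    return [
        sum(abs(p[i]) for p in per_instance) / len(per_instance)
        for i in range(len(baseline))
    ]

# Hypothetical claims: [claim_amount, num_procedures, provider_flagged].
claims = [[100.0, 2, 0], [250.0, 5, 1], [80.0, 1, 0]]
baseline = [100.0, 2, 0]  # assumed reference claim

local = shapley_values(fraud_score, claims[1], baseline)
global_imp = global_importance(fraud_score, claims, baseline)

for name, l, g in zip(FEATURES, local, global_imp):
    print(f"{name}: local={l:.3f}, global={g:.3f}")
```

The local values show how much each feature pushes one claim's score away from the baseline score, and they add up to exactly that difference. Averaging their absolute values over many claims gives a global ranking of features.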

“With the guidance of my mentor, Dr Nowshath Kadhar Batcha (Senior Lecturer of School of Computing), I was able to finish this project in around seven months, from research through implementation and documentation.

“He has been a tremendous help to me throughout the capstone project, and the poster was made based on the project's outcome, which is to establish trust between users and providers by utilising transparent technology while sticking to laws on data availability and interpretability,” she said.

With health care fraud cases on the rise, Hanna was keen to shake things up with XAI techniques that stand out from the mass of AI models, giving users easy-to-use tools that can be applied to any predictive model and offer the required explanations.

“I am glad to be able to successfully provide local and global explanations of the AI models using these two methods (SHAP and LIME),” she explained, adding, “I will continue to expand my knowledge in Data Science toolkits, explore XAI concepts, and contribute to reducing the knowledge gap in this field.”